Using PCA when there are less samples than dimensions

— statistics, data-science, matlab — 2 min read

A significant part of my PhD research [1,2,5] has involved the application of PCA (Principal Components Analysis) in unsupervised change detection problems. Working with high-dimensional streaming data, I typically have to work on the assumption that we have no context and no data in advance. We also often need to reduce the dimensionality of the problem - a common application of PCA. We can only work with what we have already seen, so getting your algorithm up and running quickly, even with partial representations of the problem, is of great importance. The code examples in this article will be in MATLAB (sorry) because the tools available make the amount of code minimal. I expect you to already be familiar with what PCA is and what it does (again, sorry, but a full explanation of PCA is way outside the scope of this short post).

So, I want to talk briefly about the application of PCA when you have less samples than dimensions, because the results can be confusing and counter-intuitive to start with.

Let's begin by applying PCA in a normal situation, where we have more samples than dimensions.

1data = randn(1000,50);2[coeff, score, latent] = pca(data);A PCA transformation can be boiled down to

$$\mathbf{T} = \mathbf{X}\mathbf{W}$$

Where $\mathbf{X}$ is the original data, and $\mathbf{W}$ is a matrix of coefficients. In MATLAB terminology, $\mathbf{X}$ is data, $\mathbf{W}$ is coeff and $\mathbf{T}$ is score.

We expect data and score to have the same dimensions, because it is a transformation of the data into the principal component space. Sure enough, this is reflected in MATLAB.

1>> size(data)23ans =45 1000 5067>> size(score)89ans =1011 1000 501213>>Now let's take some data that is wider than it is long. We have 50 points in a 1000-dimensional space. Prime real estate for PCA to trim down.

1data = randn(50,1000);2[coeff, score, latent] = pca(data);The same rules apply, right?

1>> size(data)23ans =45 50 100067>> size(score)89ans =1011 50 491213>>Wait, what? Our transformed data only has 49 dimensions instead of 1000. Why? Let's simplify the problem a little.

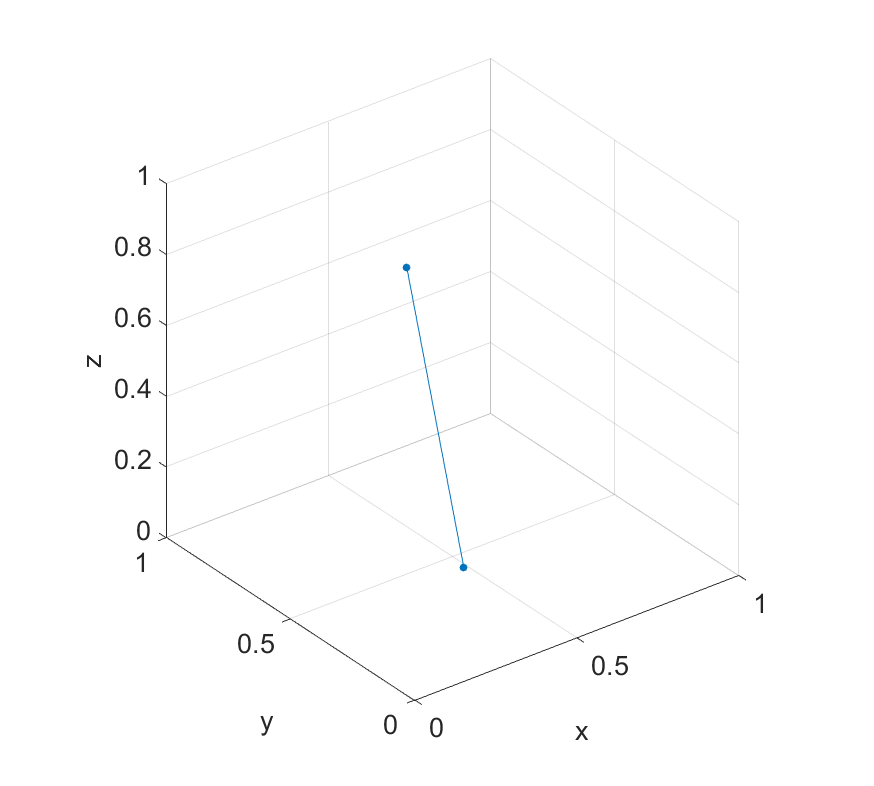

Imagine instead that we have two points in a 3D space.

1x = rand(2,1);2y = rand(2,1);3z = rand(2,1);4scatter3(x,y,z,'filled');56xlabel('x');7ylabel('y');8zlabel('z');910axis([0 1 0 1 0 1]); axis square;11line([x(1) x(2)],[y(1) y(2)],[z(1) z(2)])1>> [x,y,z]23ans =45 0.5470 0.7447 0.68686 0.2963 0.1890 0.1835

It's clear when plotting them what the problem is. Principal components are the axes of most variance in the data. But in this case where we only have two points, there's only one axis of variance between them. This exact problem scales up into higher dimensions (but is harder to visualise). If we try to compute the principal component coefficients, we find sure enough that we only have 1 column (one component).

1>> size(pca([x,y,z]))23ans =45 3 1The rule is as follows [3, 4]:

A sample of size $n$ with $p$ dimensions has at most $n - 1$ principal components if $n \leq p$.

So effectively, we can derive principal components when we have less samples than dimensions, but only $n - 1$ components. In any case this is probably not desirable, because the sample is too small to to derive a fully meaningful transform. If you are planning to use the components to transform future elements of a stream for example, then it is better to wait for at least $p$ samples.

References

[1] Kuncheva, Ludmila I., and William J. Faithfull. "Pca feature extraction for change detection in multidimensional unlabelled streaming data." Pattern Recognition (ICPR), 2012 21st International Conference on. IEEE, 2012.

[2] Kuncheva, Ludmila I., and William J. Faithfull. "PCA feature extraction for change detection in multidimensional unlabeled data." IEEE transactions on neural networks and learning systems 25.1 (2014): 69-80.

[3] ttnphns (https://stats.stackexchange.com/users/3277/ttnphns), PCA when the dimensionality is greater than the number of samples, URL (version: 2012-05-22): https://stats.stackexchange.com/q/28914

[4] GrokingPCA (https://stats.stackexchange.com/users/60334/grokingpca), Why are there only $n-1$ principal components for $n$ data if the number of dimensions is $\ge n$?, URL (version: 2017-10-19): https://stats.stackexchange.com/q/123318

[5] Faithfull, William. Unsupervised Change Detection in Multivariate Streaming Data. Diss. Bangor University, 2018.